First, a caveat: this is an entirely subjective post.

I’ve had a lot of clients expressing concern over the past year about what AI is going to do to their search traffic, and whether Google is going to go the way of the dodo…err…Yahoo directory. But, despite all the hype, that doesn’t appear to be the case, not by a long shot.

Based on data published recently in Search Engine Land, it would appear that Google’s traffic is actually still growing year over year.

Hype, hype, hype…

Let’s be honest, whenever something as potentially game-changing as AI hits the market, people’s brains kind of explode. Soon no one will have jobs, every technology will become obsolete, etc. In reality, most of us have no true idea how AI will be integrated into business, existing technology, or the world at large. But that doesn’t stop rampant speculation and a million articles about how Google and other search engines are destined for the dustbin.

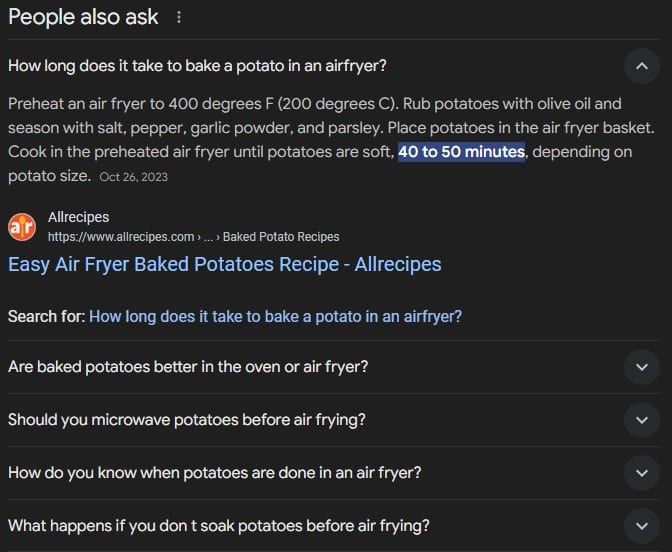

In truth, based on how many people could use AI, search engines have “kinda” been doing it for a while in the form of answer search features. Hell, if you have an Alexa, Google Home, or similar device, you simply ask a basic question like, “How long do I cook a baked potato in an airfryer?” and you’ll get an answer. Similarly, if you do that search on your phone or a computer, you’ll still get an easily accessible answer in the form of a People Also Ask search feature like so:

The trust factor

For short form answers, search engines largely give users what they want, as well as various options and easily accessible sources. When using a Google Home for example, if you ask this question, it will even begin the answer with, “According to allrecipes.com…” which is helpful as a user to vet the quality of the information. Of course, a tool like Perplexity will offer sources, but a lot of AI puts the onus on the user to vet the response. For people with even a twinge of skepticism, there’s something nice about pulling up a search and quickly scanning the results and seeing that a number of sources have the same answer rather than blindly trusting AI’s ability to suss out what’s real from what’s not.

I think we all need to remember that at this point AI doesn’t really “make” things, it’s simply a tool that aggregates, formats, and spits out existing information that it has been fed based on the commands we provide it. And, that’s the thing, humans are still required to do the legwork for the research and creative inputs to provide the data and structures from which AI works. I have no doubt that AI will continue to refine its ability to provide accurate answers, but in many instances, we live in a world that’s not black and white.

Take a subject like weed control for example. If you were to ask AI a general question like, “What’s the best way to kill weeds between sidewalk cracks?” a tool like ChatGPT will give you a list of every conceivable way it knows about how to kill weeds. It gives a list of natural and chemical options, with a couple of brief but helpful thoughts on the pros and cons of each approach. What it doesn’t give you, however, is an in-depth answer on what truly is the best method. Now, I’m certain every method could make a case, but in truth, I would much rather take a few minutes to go through Google results and read some takes on the subject from concrete companies or landscaping experts, or watch a video of some of these methods in action, all of which show up in a Google result for that search. Again, this is personal preference, but I doubt I’m alone in this instance.

AI and search engines aren’t exactly direct competitors

Speaking solely from my own experience, I use these tools largely for very different purposes. In my world, I use AI as a tool to improve or streamline tasks that I don’t intend to completely hand over to AI. From an information gathering perspective, I still prefer to do the vetting myself, though the number of inquiries that I trust to either platform could change over time. At this point, I use AI to assist with content generation in many instances, but I prefer to do the research myself, provide it with trusted inputs and some of my own findings, and then have it structure drafts of content that I can revise and improve. Turning over all of those tasks to AI is a recipe for disaster in my opinion.

That said, I can’t compile a bunch of research on a topic and tell Google to structure it into a blog post based on an outline. So, at a professional level, these tools often work in unison rather than as direct competition with one another.

Of course, I’m not naive enough to think that some people won’t turn to AI even for rather critical items like building a diet plan or a workout routine, but there’s a subsection of the world who will hand over tasks like these only begrudgingly because they want to know who is providing this information to them. I still do P90X as a workout program as I approach 50 because I know the guy in the video was my age and still in great shape. That level of personal interaction helps build the trust *I* need to know that the workout can work for someone like me. AI can’t give me that reassurance…at least not yet.

Beyond that, we haven’t even discussed the zillions of other things an AI tool like Claude or ChatGPT can do beyond retrieving data. AI can create music and images and fix coding errors. The applications are too many to list, but so many have nothing to do with what search engines are designed to do.

Search is still at your fingertips

The other huge advantage search engines have is their prevalence on mobile devices. Android phones and iPhones have built-in search bars that aren’t likely to switch to AI responses any time soon. The ask-and-answer ease of using the search bar on your phone’s home screen is going to keep search viable and popular until someone breaks the stranglehold these tools have on the market.

Liability

Taking things a step further, if you’re Google or Apple, and someone asks a question that could have serious repercussions for their well-being, would you prefer to have your device give a single, potentially wrong answer or provide a list of answers that forces the user to sift through options and make their own decisions? I can’t fathom someone making a decision about their health based on a questionable, if not outright wrong, answer from AI and being okay with it. Of course, you could provide a disclaimer, but why even have to deal with that? Google’s Search Generative Experience essentially did that, and from what I saw, many people blew by it for standard results.

Until the time comes when the general populace is convinced that AI provides a vastly superior experience to existing search options and starts beating down Google’s and Apple’s doors, I just don’t see that changing.

And until it does, search isn’t really going anywhere.
