Last Updated on March 22, 2018 by admin

A few weeks ago, Google quietly removed the limit to the number of times that you can submit a URL to Google’s index.

Before this update, you could submit up to 500 individual URLs to Google per month, and only 10 of those submissions could also ask Google to crawl additional pages on the website, starting from the page submitted.

This news is not all that impactful, as most site owners don’t come anywhere near those limits in a given month, but it should remind the typical website owner that the request indexing tool can be very helpful for getting pages indexed and, in turn, increasing website traffic.

Why does Google allow you to submit URLs?

Whenever you make changes to existing content on your site, or if you add something new, Google will need to crawl the page to identify the changes and update its index accordingly.

Submitting a URL helps to expedite that process. Whether you’ve launched an entirely new website, added new pages, or found that Google isn’t indexing a page that it should, the tool gives you a way to let Google know about a particular page.

Assuming that your website gets a decent amount of traffic, Google is typically able to update its index without your help. So if you need to use this tool on a regular basis, there may be bigger issues at hand that should be addressed.

A List of Problems That Cause Indexing Issues

There are many different factors that can cause indexing issues with search engines. We’ve outlined some of the most common ones below:

1. Start with the Sitemap

Having a sitemap in place is SEO 101, and is critical for helping Google identify all the pages on your website. Sitemap problems are also among the most significant, yet easiest to fix, SEO issues that websites encounter.

So what is a sitemap? Well, you can think of a sitemap as an easier way for Google to identify and crawl pages on your website. Instead of making a bot discover each page by jumping from one link to another, a sitemap lists every page on your website in a format that is easy for the bot to digest. Google has a good page on what a sitemap is and why it is important.

Creating a sitemap is fairly easy and can be done for free on a number of sites like XML-Sitemaps.com or with tools like Screaming Frog SEO Spider. If you use a popular CMS like WordPress, there are plugins that can generate a sitemap dynamically.
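
If you’d rather see what those tools produce, here is a minimal sketch of a sitemap generator in Python. It assumes you already have a list of your page URLs; the `build_sitemap` function and the example.com addresses are our own placeholders, not part of any tool mentioned above.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a sitemap XML string with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages on a placeholder domain.
pages = [
    "https://www.example.com/",
    "https://www.example.com/about/",
]
sitemap_xml = build_sitemap(pages)
```

Saving `sitemap_xml` as sitemap.xml at the root of your site would give you a bare-bones file to work with. A real sitemap often carries optional fields such as a last-modified date, which this sketch leaves out.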

Important: once you create a sitemap, you need to submit it to Google. This is done through Google Search Console.

If you’re looking for options for creating a sitemap for your website, this is a great resource.

2. Nofollow/Noindex

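Sometimes a page is not indexed because a noindex or nofollow meta robots tag has been applied to it. A noindex tag tells Google not to index the page, while a nofollow tag tells it not to follow the links on the page, which can keep the pages it links to from being discovered. These tags are often applied while pages are in development on a live website: the site owner doesn’t want Google to index them yet, so a tag is added temporarily and sometimes never removed.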

A quick way to check whether this is the issue is to view the page’s source code and search for “nofollow” or “noindex”. Tools like Screaming Frog and the Moz browser extension can also help with this check.
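
If you have many pages to check, the same search can be scripted. The sketch below is one possible approach using Python’s built-in HTML parser; the `RobotsMetaParser` class and `robots_directives` function are names we made up for illustration, and the sample HTML is invented. It only looks at meta robots tags, so it would not catch a directive sent in an HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so default to "".
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() != "robots":
            return
        for part in attr_map.get("content", "").split(","):
            directive = part.strip().lower()
            if directive:
                self.directives.add(directive)


def robots_directives(html):
    """Return the set of meta robots directives found in an HTML string."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Invented page source for illustration.
sample = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
found = robots_directives(sample)
blocked = "noindex" in found
```

For the sample page above, `found` contains both directives, so `blocked` is true and the page would be worth a closer look.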

3. Robots.txt

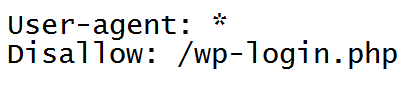

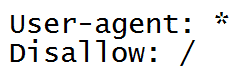

Similar to the nofollow/noindex meta tag, a website’s robots.txt file gives web crawlers instructions about which pages on the website they should and should not crawl.

This can be helpful for saving your website bandwidth and server resources, as well as for keeping Googlebot from crawling and displaying certain pages of your website in search results, such as a CMS login page. On a WordPress site, for example, such a rule might read Disallow: /wp-admin/ under a User-agent: * line.

However, if a robots.txt file is incorrectly set up, or if a crawl rule is left in place when it should no longer exist, indexing issues can occur. A robots.txt file that contains only User-agent: * followed by Disallow: / tells Google not to crawl any page on the website.
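
You can test how a crawler would read a set of rules before you publish them. The sketch below uses Python’s standard `urllib.robotparser` module to compare a rule that blocks only a login area with one that blocks the whole site. The `/wp-admin/` path, the example.com domain, and the `crawl_checker` helper are assumptions for illustration, and Python’s parser may not match Googlebot’s behavior in every edge case.

```python
from urllib.robotparser import RobotFileParser


def crawl_checker(robots_txt):
    """Parse robots.txt text and return a parser that can answer can_fetch()."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# Blocks only a (hypothetical) CMS login area.
BLOCK_LOGIN = """\
User-agent: *
Disallow: /wp-admin/
"""

# Blocks every page on the site.
BLOCK_ALL = """\
User-agent: *
Disallow: /
"""

login_rules = crawl_checker(BLOCK_LOGIN)
site_rules = crawl_checker(BLOCK_ALL)

login_blocked = not login_rules.can_fetch("Googlebot", "https://www.example.com/wp-admin/")
blog_allowed = login_rules.can_fetch("Googlebot", "https://www.example.com/blog/")
everything_blocked = not site_rules.can_fetch("Googlebot", "https://www.example.com/blog/")
```

With the first set of rules only the login area is off limits, while the second set blocks the blog page too, which is exactly the kind of leftover rule that causes indexing issues.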

4. Crawl Errors

It costs Google a wee bit of money every time it crawls a website, which adds up when it crawls billions of webpages in a single day. So Google has an incentive not to crawl websites with slow server connections and broken links.

If Google starts crawling a sitemap and finds that three of the first five URLs submitted are broken links, it may well assume that there are many other broken URLs in the sitemap and abandon the crawl.

You can find a list of crawl errors in Google Search Console. You can also run a crawler like Screaming Frog to find any broken internal links on your website.
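
For a quick spot check of a handful of URLs, a short script can do a similar job. This is a rough sketch rather than a replacement for a full crawler: `http_status` and `find_broken` are names we invented, the 10-second timeout is an arbitrary choice, and some servers reject the HEAD requests used here, so a reported failure is worth confirming in a browser.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=10):
    """Return the HTTP status code for url, or None if no response arrives."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # The server answered, but with an error code such as 404 or 500.
        return error.code
    except (urllib.error.URLError, OSError):
        # DNS failure, refused connection, timeout, and similar problems.
        return None


def find_broken(urls, get_status=http_status):
    """Return (url, status) pairs for URLs that error out or do not respond."""
    broken = []
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            broken.append((url, status))
    return broken
```

Calling `find_broken` with the URLs from your sitemap returns the ones that came back with an error code or did not respond at all. The `get_status` parameter exists only so the check can be swapped out, for example when testing without a network connection.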

5. Duplicate Content

Everyone knows that Google likes content, but the content has to be unique. If pages on your website use the same blocks of content, Google identifies those pages as being basically the same, which can result in Google only indexing one of the pages that displays the content.

We often see this issue on e-commerce websites that have numerous pages for very similar products with the same product description. It may take some time, but rewriting the content so that each page is unique should result in those pages being indexed.
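
To find candidates for a rewrite, you can compare page text pairwise. The sketch below uses Python’s standard `difflib` module as a simple stand-in; it says nothing about how Google itself measures duplication. The `similar_pages` function, the 0.9 threshold, and the sample product descriptions are all assumptions for illustration.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalize(text):
    """Lowercase the text and collapse runs of whitespace."""
    return " ".join(text.lower().split())


def similar_pages(pages, threshold=0.9):
    """Return (url_a, url_b, ratio) for page pairs at or above the threshold.

    pages maps each URL to the text of that page.
    """
    matches = []
    for (url_a, text_a), (url_b, text_b) in combinations(pages.items(), 2):
        ratio = SequenceMatcher(None, normalize(text_a), normalize(text_b)).ratio()
        if ratio >= threshold:
            matches.append((url_a, url_b, ratio))
    return matches


# Invented product descriptions for illustration.
catalog = {
    "/shirt-red": "Soft cotton T-shirt with a crew neck.",
    "/shirt-blue": "  soft cotton t-shirt   with a crew neck.",
    "/boots": "Waterproof hiking boots with steel toe caps and thick soles.",
}
duplicates = similar_pages(catalog)
```

Here the two shirt pages are flagged because their descriptions are identical once case and spacing are ignored, while the boots page is not. Comparing every pair gets slow on large catalogs, so this approach suits a spot check better than a full audit.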

6. Manual Penalty

This one is somewhat rare, and there’s a simple way to check for the issue. Visit Google Search Console, and if there is a message stating that you have a manual penalty on your website, then this is the cause of the issue.

What does Google do? In the worst case, it removes your entire website from its index, meaning your site won’t show up for anything, even its brand name (ouch).

What causes a manual penalty? Common causes include too many links from spammy websites and overly optimized anchor text links. We wrote a post on the process for removing a manual Google penalty if you have confirmed that your website is the victim of one.

Summary

Many webmasters face issues with getting webpages into Google’s index. The best course of action is to run through the list of possible problems, spot check each potential issue, and home in on the real problem at hand.

Photo Credit:

Palp via Wikimedia Commons [CC BY-SA 3.0 (https://creativecommons.org/licenses/by-sa/3.0/)]
